My Role | Principal UX Designer, Director

User Personas

Maryia Marketing Manager

"I need a tool that shows me ad performance data with both the visual creative and the hard numbers."

Graham Graphic Designer

"I put a lot of work into designing my ads and struggle to understand why some do well and others don't."

Corinna Copywriter

"Different copy paired with different images can impact ad performance."

User Tasks & Scenarios

User Tasks

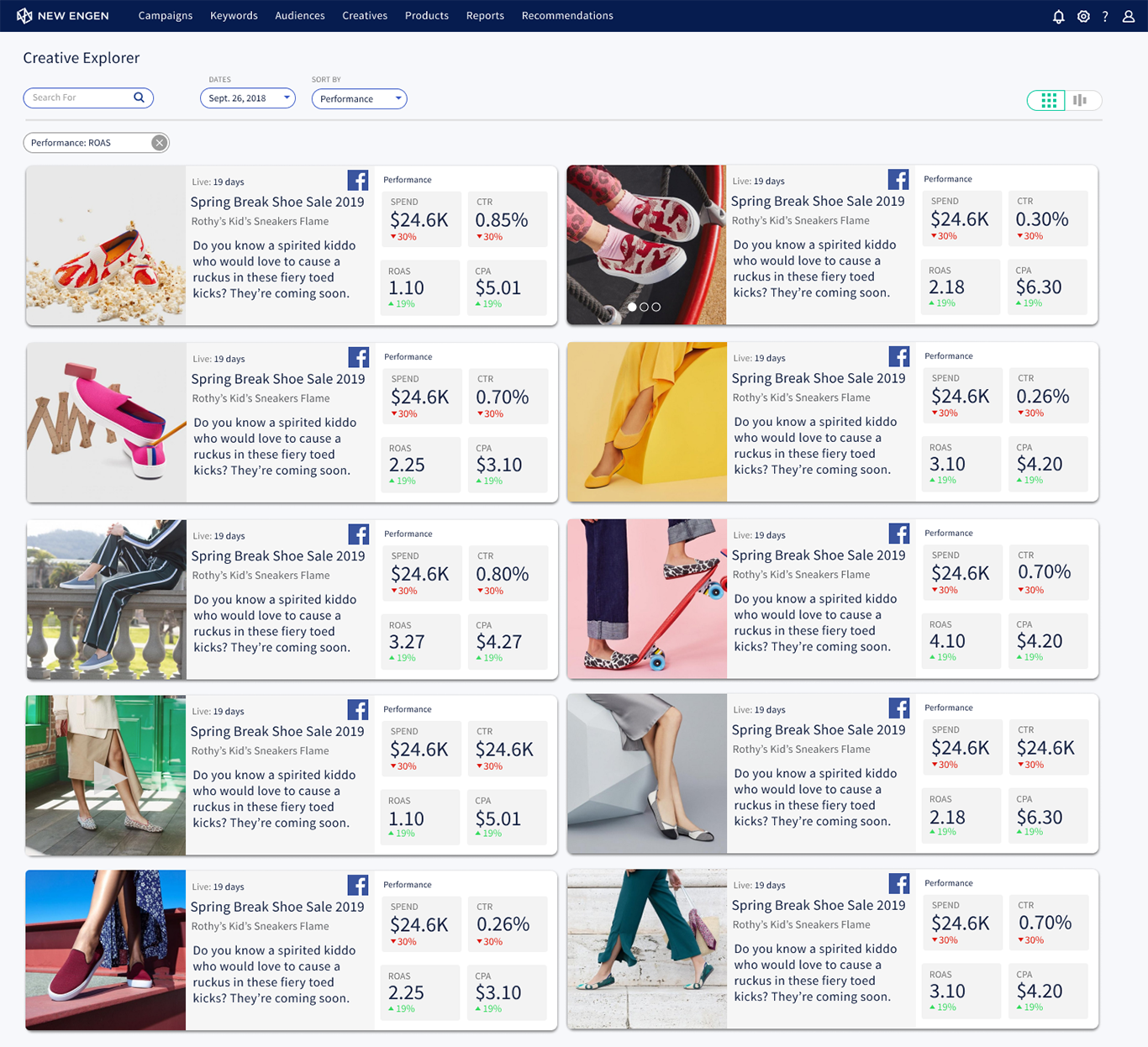

- As a Marketing Manager, I need detailed performance metrics so that I can demonstrate the ROI of my campaigns and secure future budget allocations.

- As a Marketing Manager, I need access to historical campaign data so that I can identify trends and make data-driven decisions for upcoming campaigns.

- As a Graphic Designer, I need comparative data on different design versions so that I can learn from high-performing ads and replicate their success in the future.

- As a Copywriter, I need insights into which copy and visual pairings resonate best with the audience so that I can create more effective ad campaigns.

A day in the life of Maryia Marketing Manager

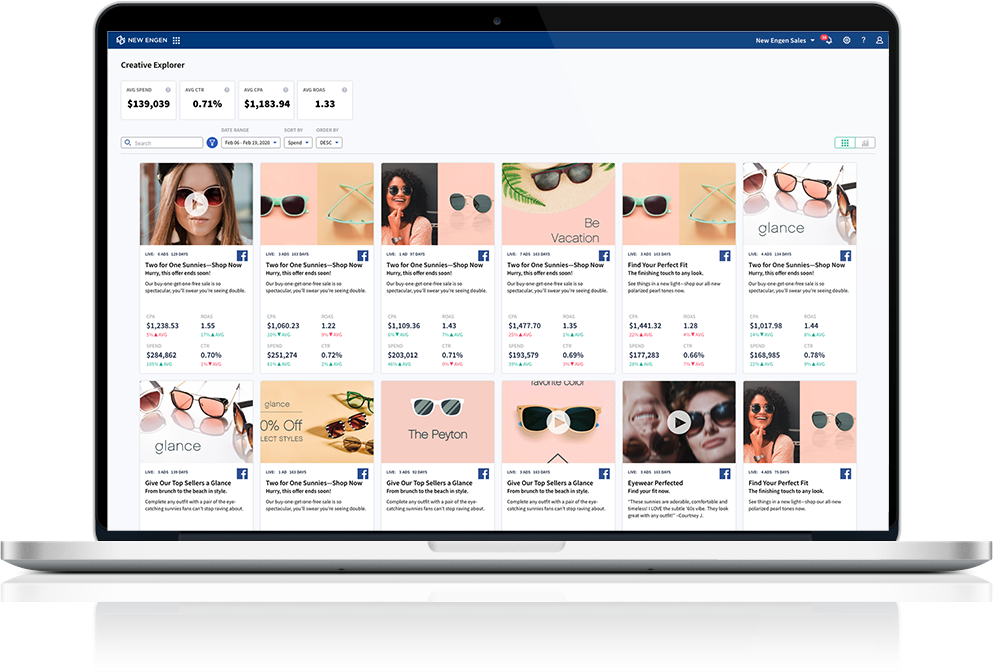

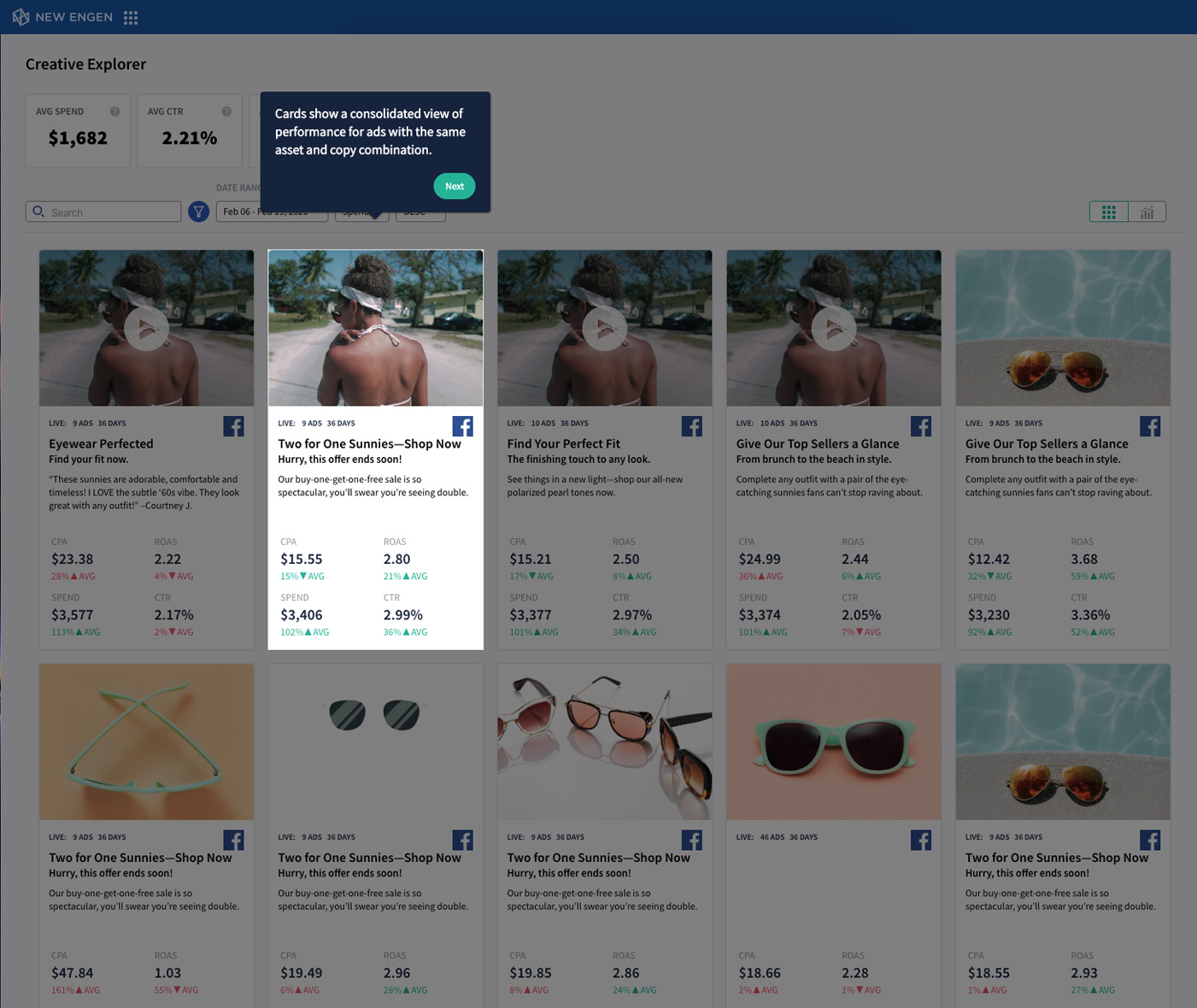

Maryia works for an eyewear company, and she just launched a spring break campaign featuring a new line of sunglasses. On her bus ride into work, she sips coffee and checks in on how her digital ad campaigns performed on Facebook yesterday. Maryia prefers to look at CPA (Total Ad Spend / # of Acquisitions) to gauge the "health" of each ad. This morning she is happy to find that CPA across all ads in the spring launch campaign is down 7.4% and that the campaign is on track to spend the weekly budget she set.

Once Maryia arrives at the office, she opens her laptop and dives deeper into the data. She specifically wants to see how the ads with headline copy containing the word "beach" performed. She notices that their CTR is 26% higher on average, so she decides to increase both the bid and the spend on these ads.

Maryia looks at the clock and notices she is late for a meeting with her "Google Shopping" counterpart. In the meeting she learns that the production facility is having issues and is unexpectedly running out of stock on the company's most popular frames. Maryia reduces the budget on ads promoting those frames and reallocates it to ads that lead customers to the next best-selling styles. She repeats this process for her campaigns on Google Display Network and Pinterest, and before she knows it, it's already 1:50. She grabs her purse and rushes out the door to try to make it to her favorite salad bar before it closes.

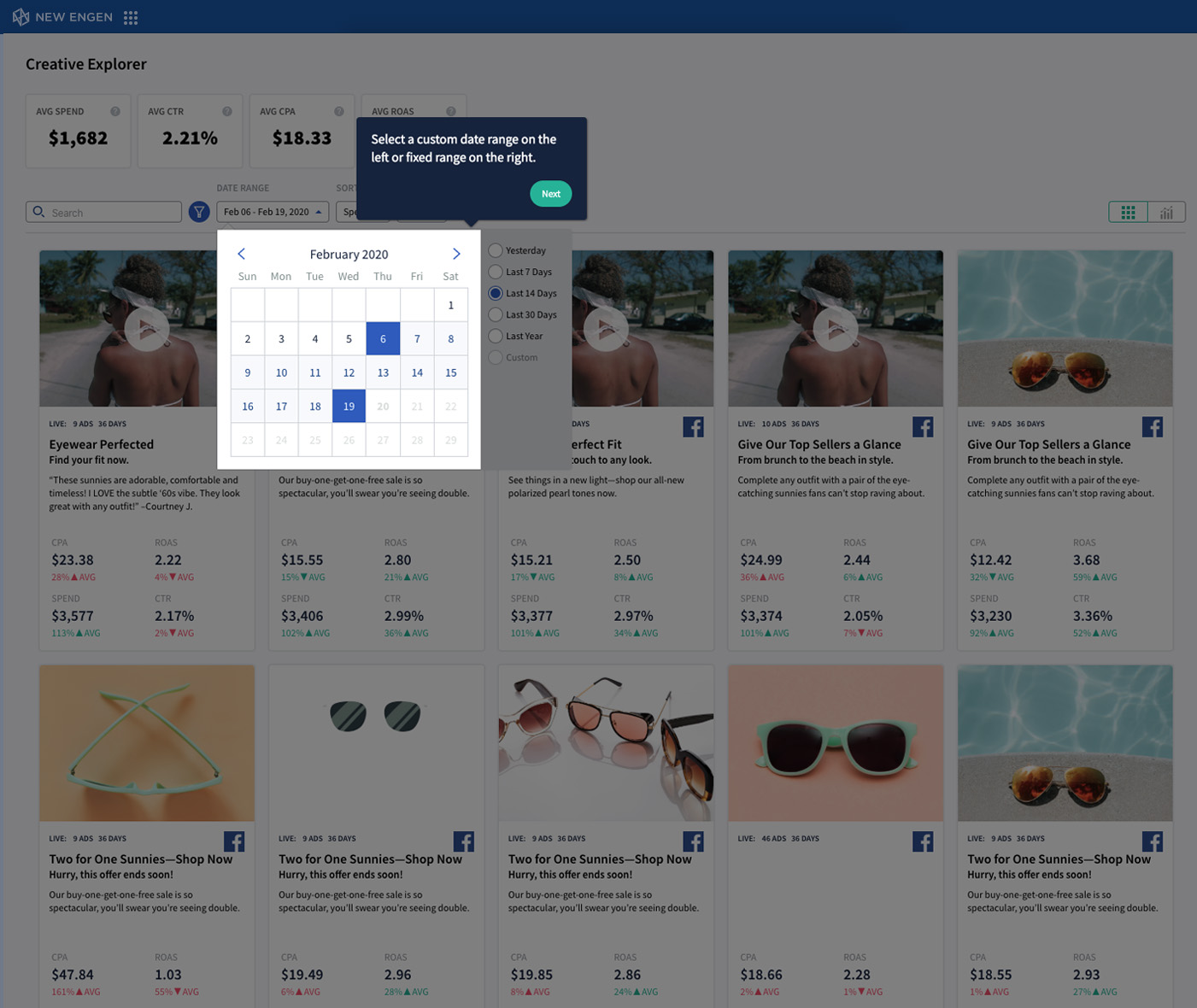

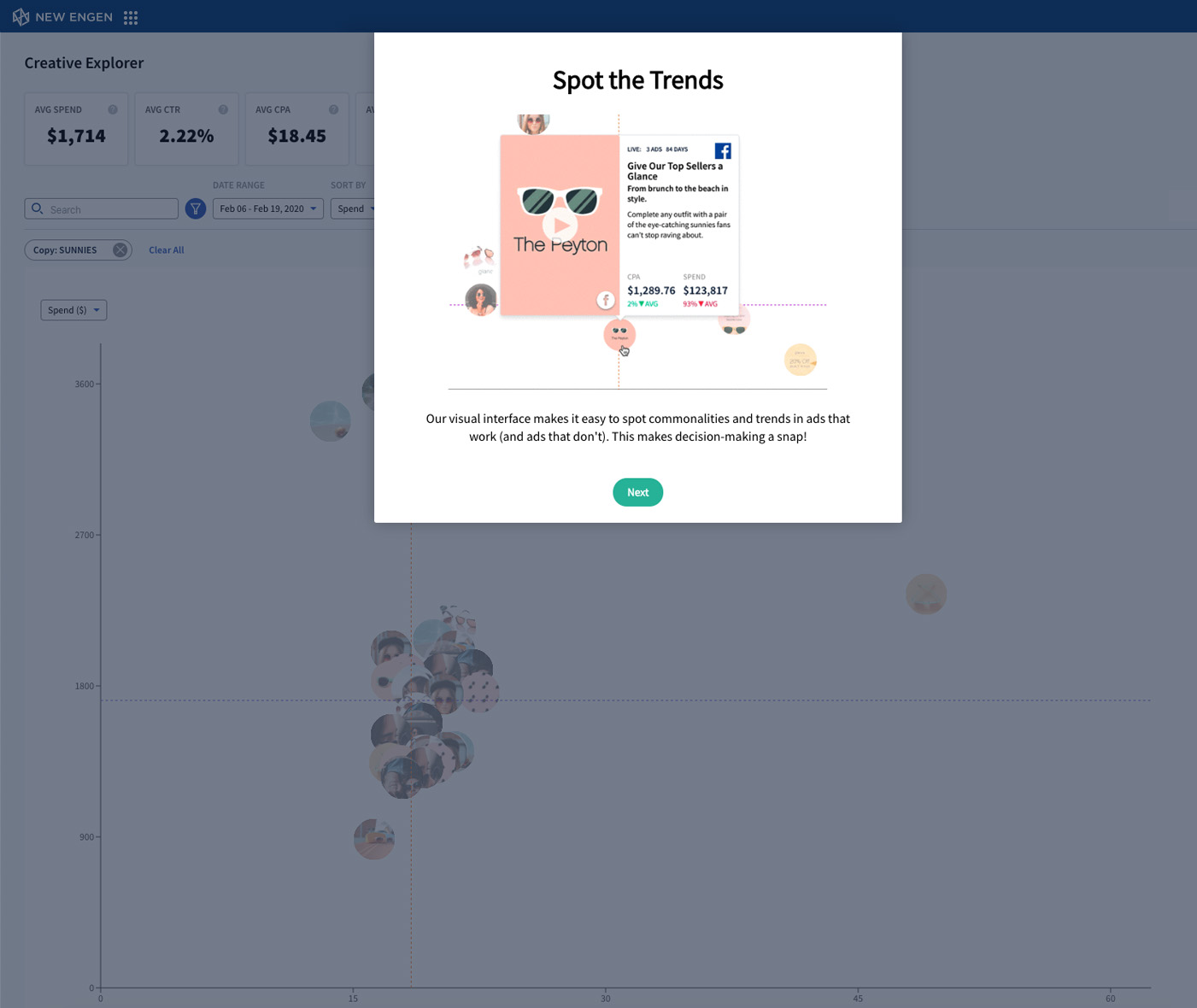

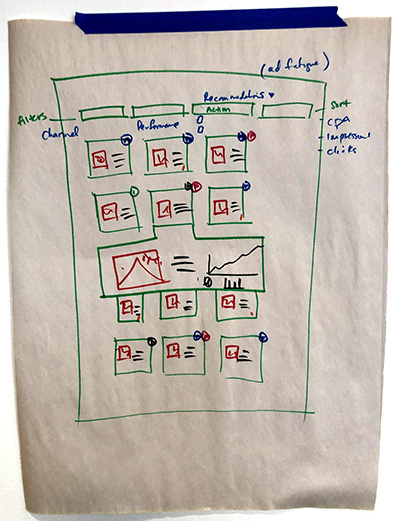

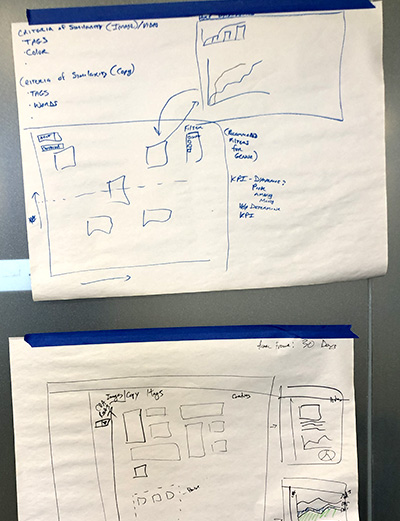
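Maryia's morning check rests on one formula, CPA = Total Ad Spend / # of Acquisitions, plus a comparison against the previous day. The sketch below shows that arithmetic in Python; the function names and the spend and acquisition figures are hypothetical, chosen only for illustration. Because CPA is a cost, a negative change means each acquisition got cheaper.

```python
def cpa(total_ad_spend: float, acquisitions: int) -> float:
    """Cost per acquisition: Total Ad Spend / # of Acquisitions."""
    if acquisitions == 0:
        raise ValueError("CPA is undefined when there are no acquisitions")
    return total_ad_spend / acquisitions


def percent_change(previous: float, current: float) -> float:
    """Relative change from `previous` to `current`, in percent."""
    if previous == 0:
        raise ValueError("percent change is undefined from a zero baseline")
    return (current - previous) / previous * 100


# Hypothetical spend and acquisition counts for one ad on two days.
day_1 = cpa(total_ad_spend=500.0, acquisitions=20)  # 25.0 per acquisition
day_2 = cpa(total_ad_spend=480.0, acquisitions=24)  # 20.0 per acquisition
print(f"CPA change: {percent_change(day_1, day_2):+.1f}%")  # CPA change: -20.0%
```

Raising an error on zero acquisitions, rather than returning 0, keeps an ad with spend but no sales from looking artificially "healthy".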

Design Studio

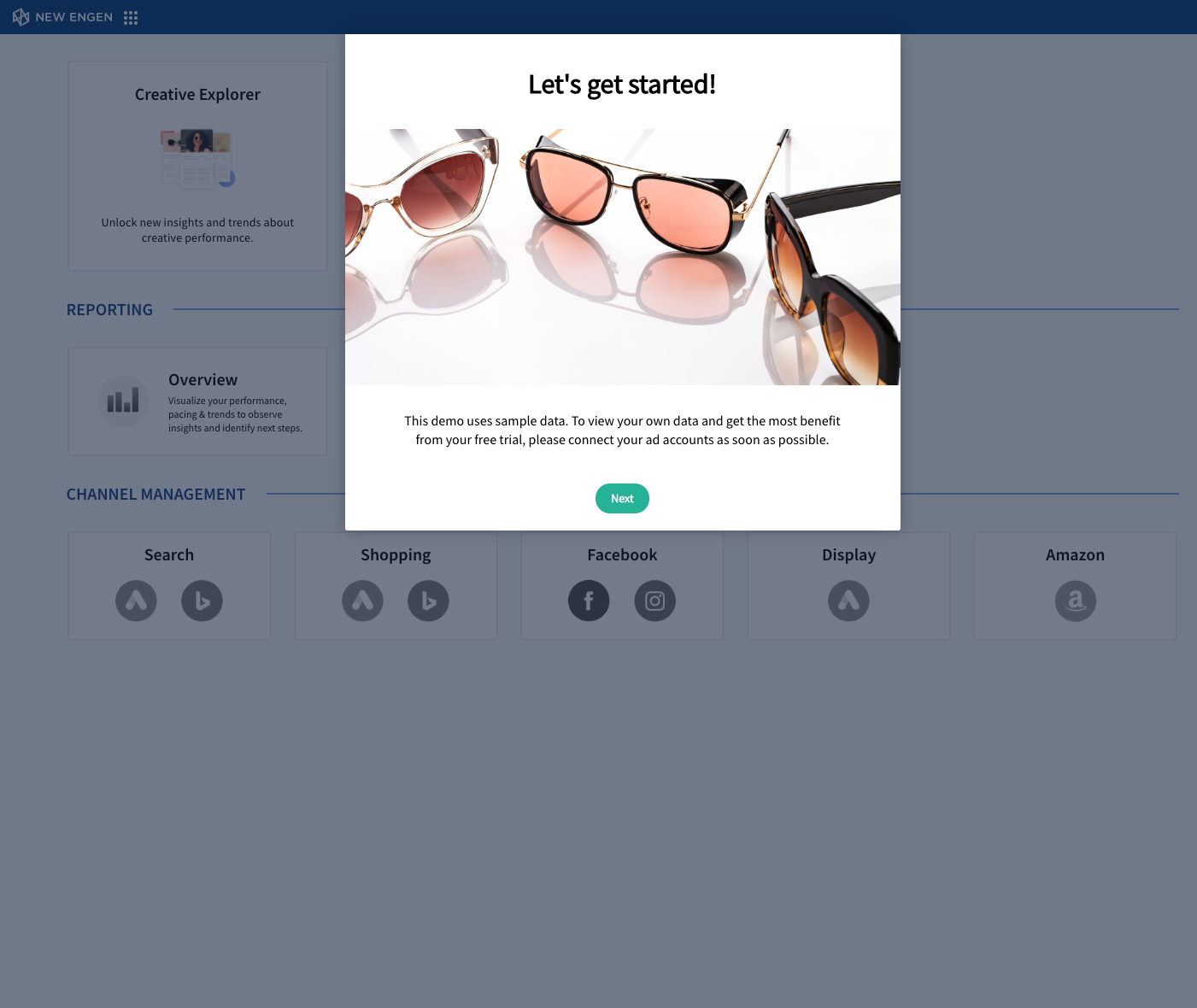

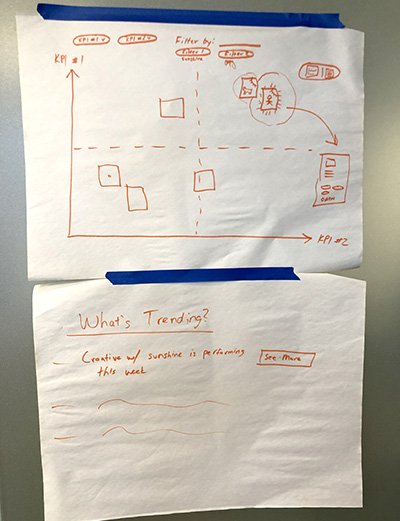

To tap into the team's collective knowledge, I organized a brainstorming session with our internal subject matter experts that was specifically designed to cast the widest net possible.

After sketching and discussing different options, we landed on a direction that visually showcases ad performance reporting. Including stakeholders from sales, marketing, and engineering gave us insight into the opportunities and costs we would need to consider, from the interaction details to the big-picture strategy.

Brainstorming Session Outcomes

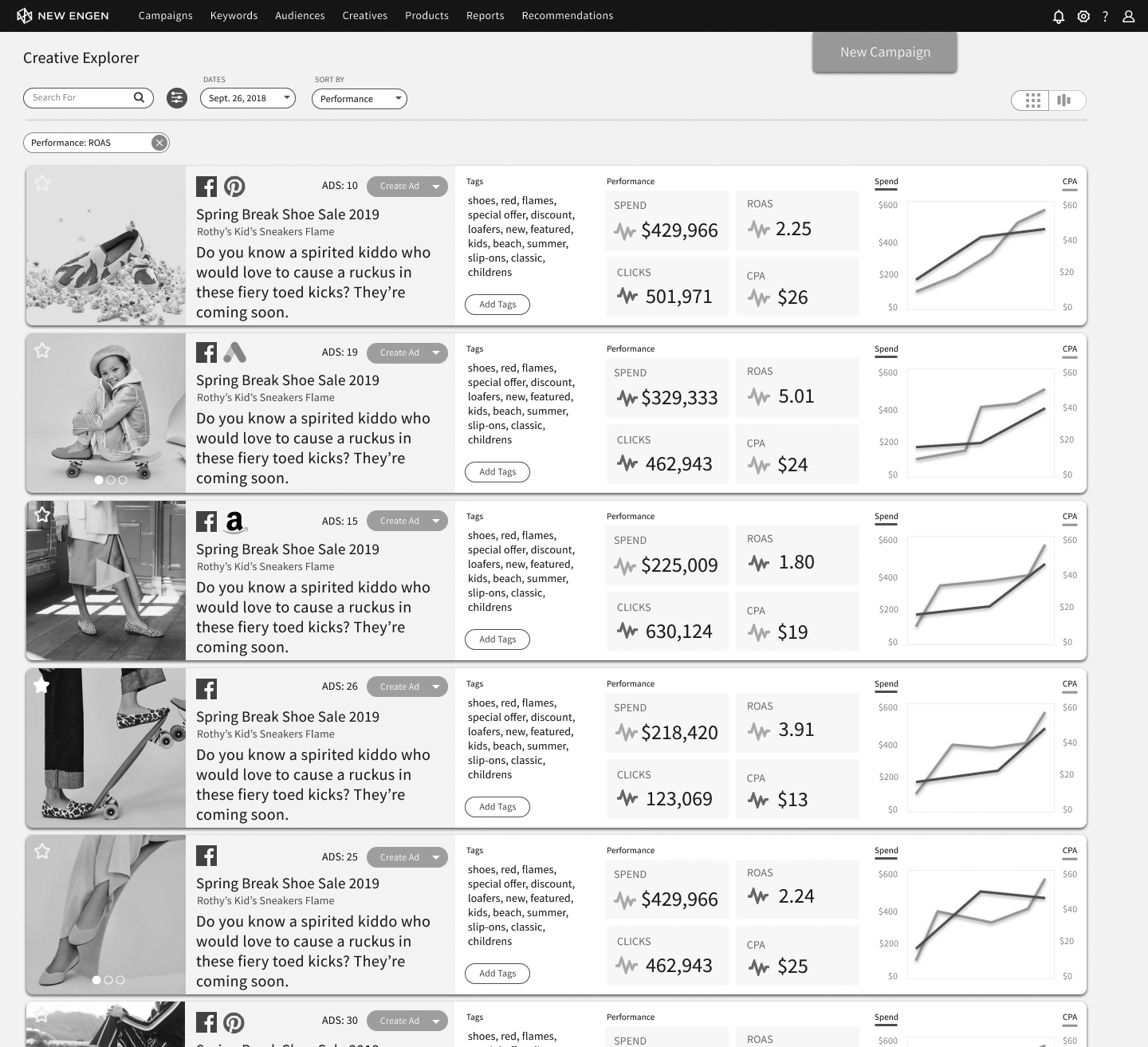

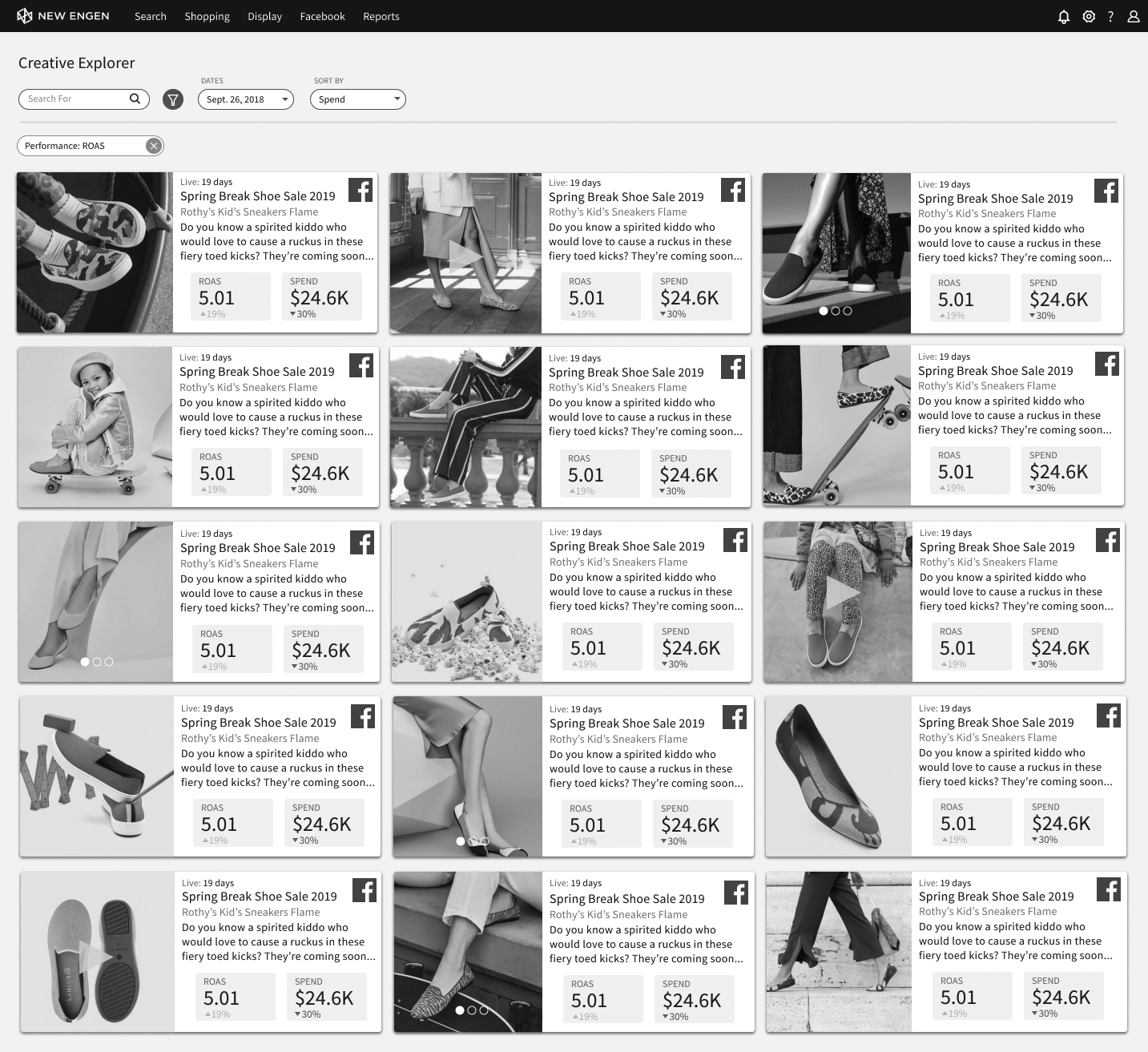

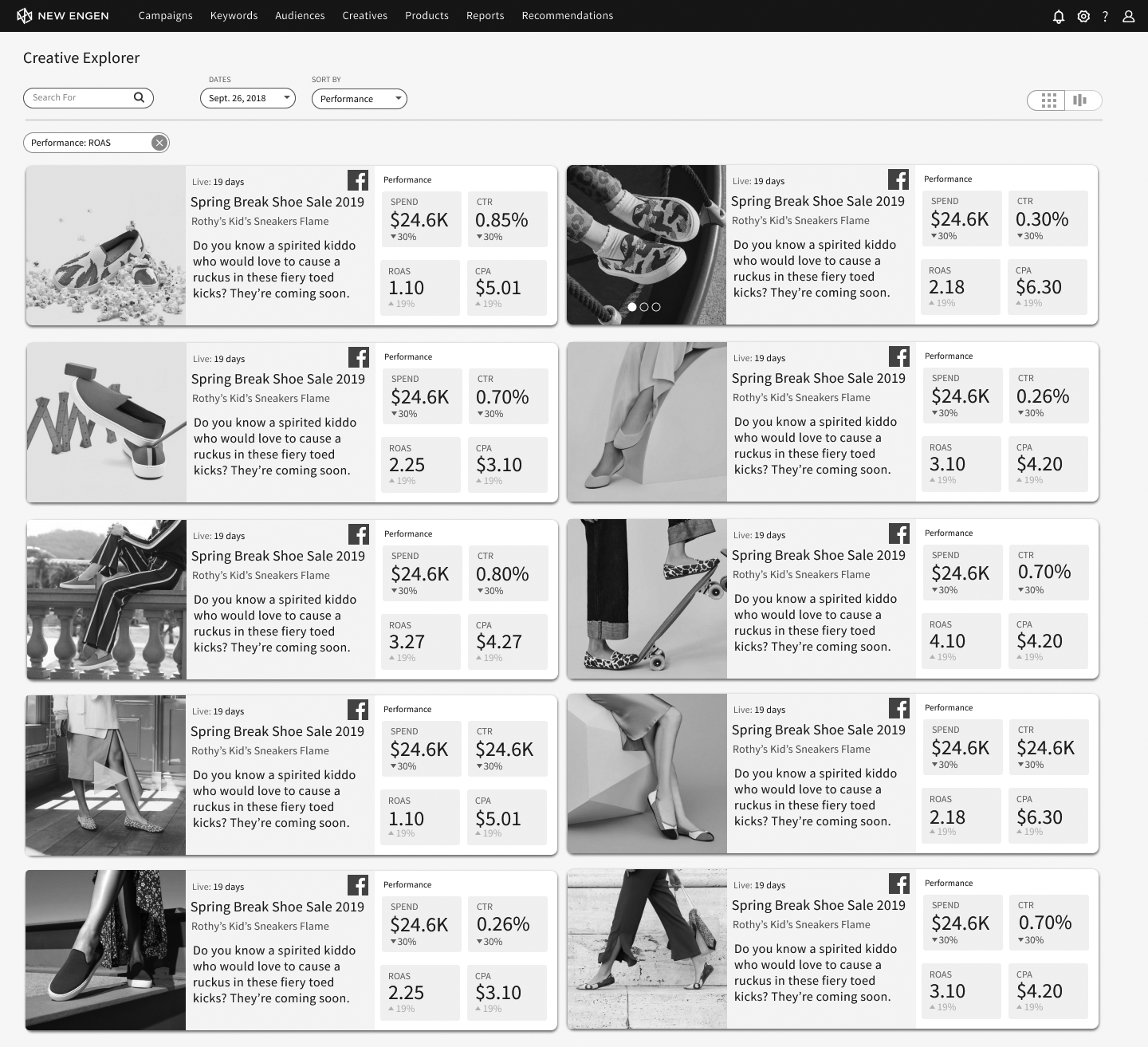

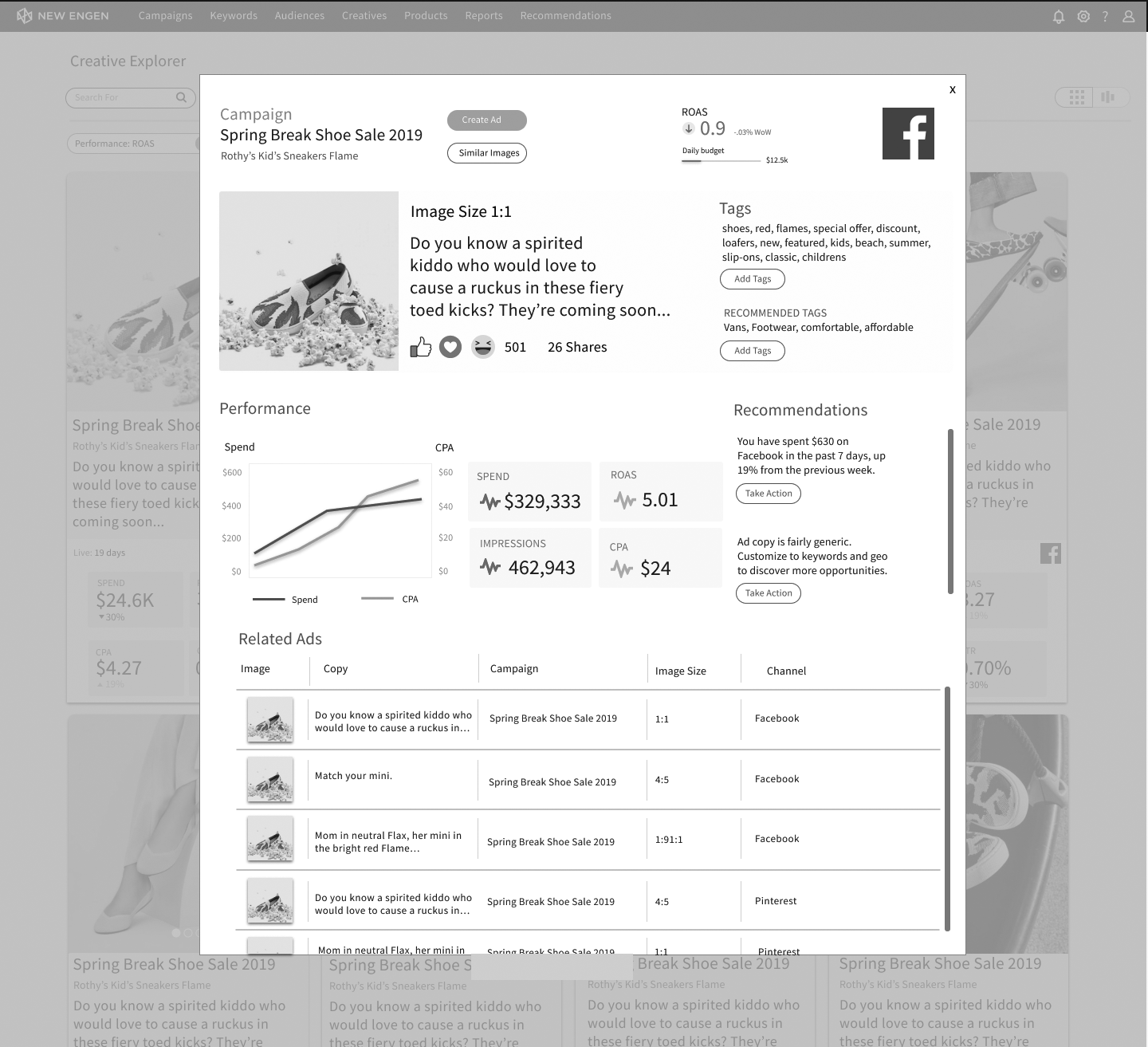

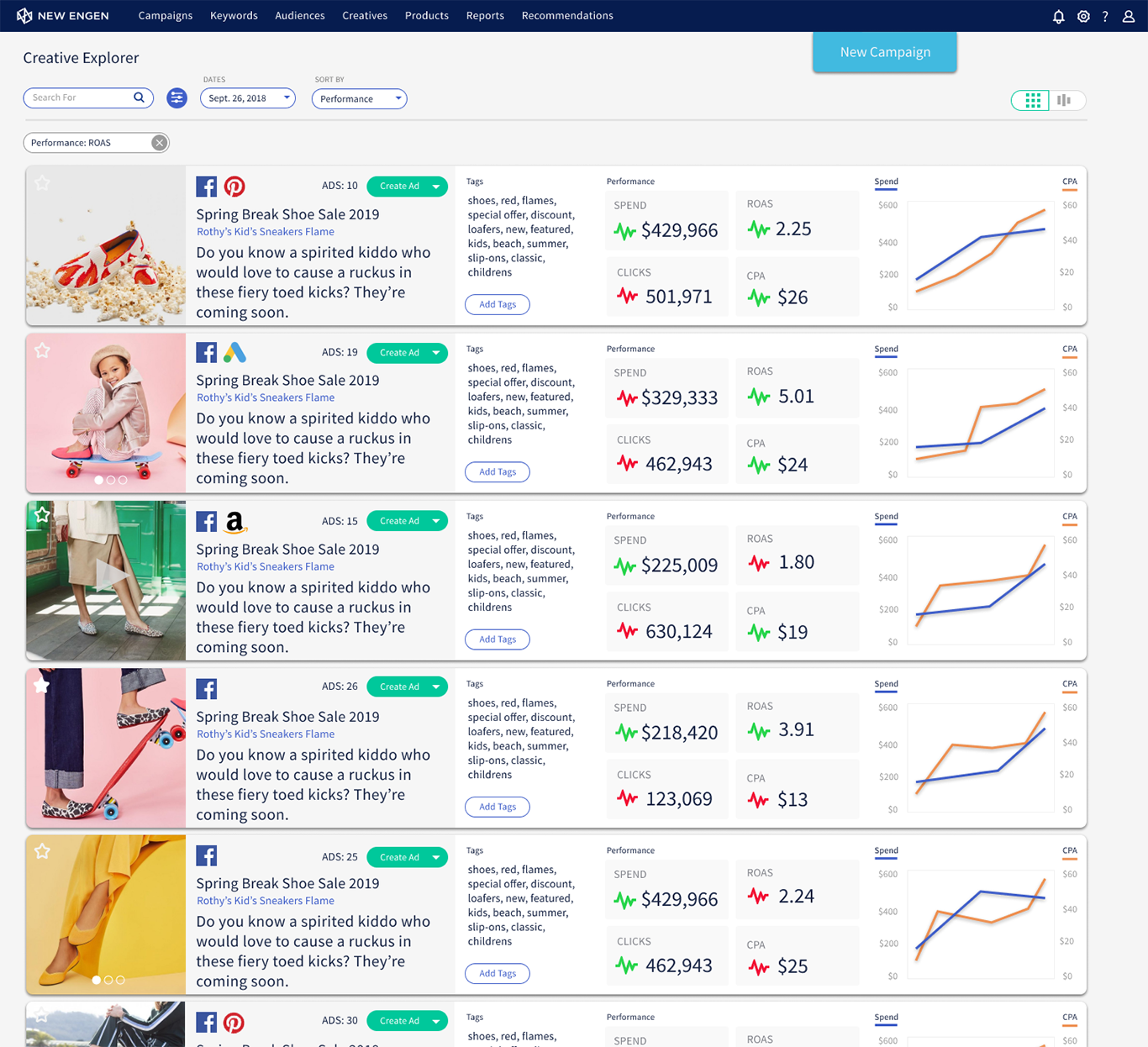

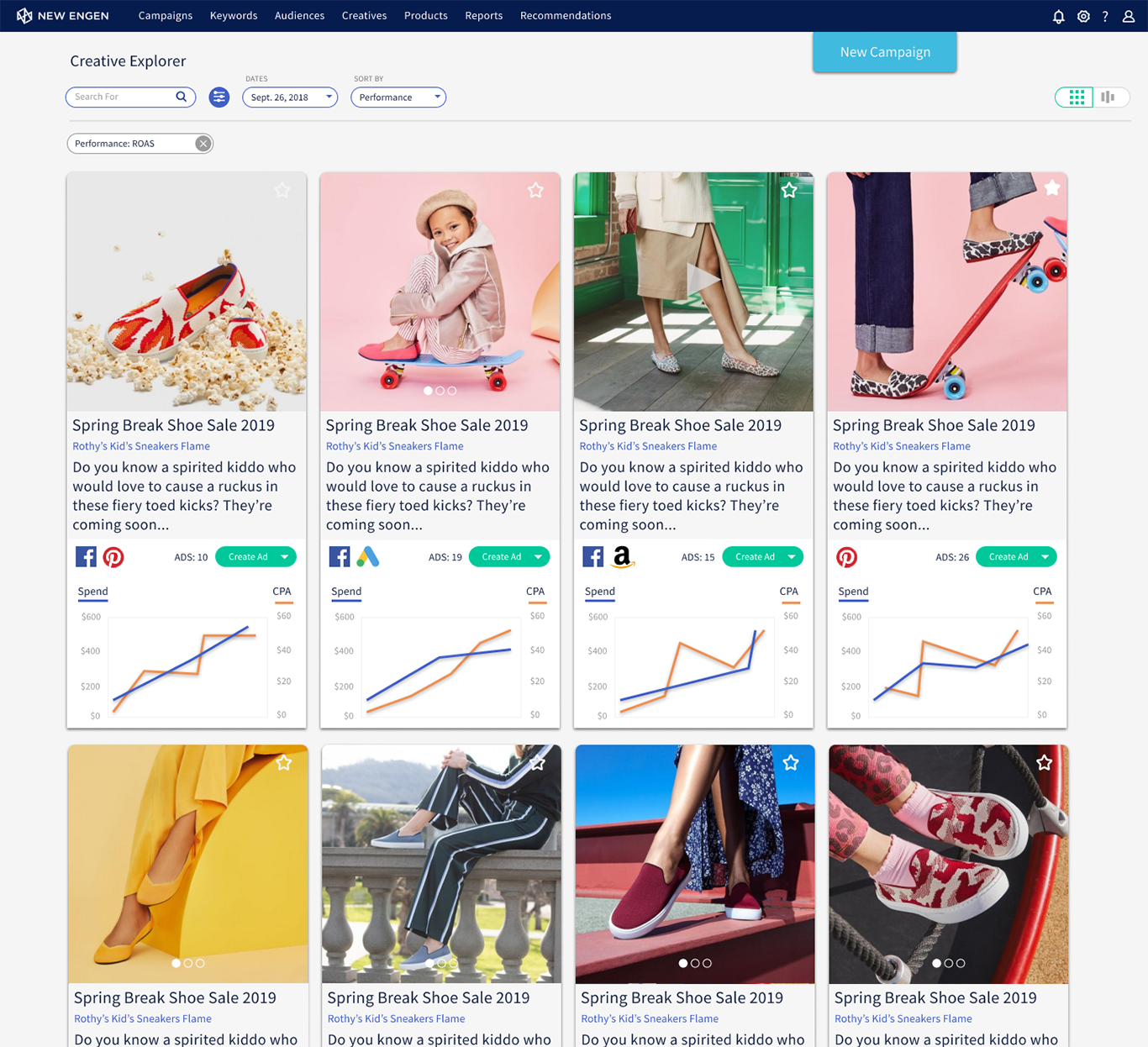

- Identified key metrics such as CTR (click-through rate), Conversion Rate, CPC (cost per click), ROAS (return on ad spend), and Engagement Metrics (Likes, Shares, Time on Page, Impressions, and Bounce Rate). We also highlighted the importance of Platform-specific Metrics (Search, Social, Shopping, Programmatic, Mobile) to tailor insights accurately.

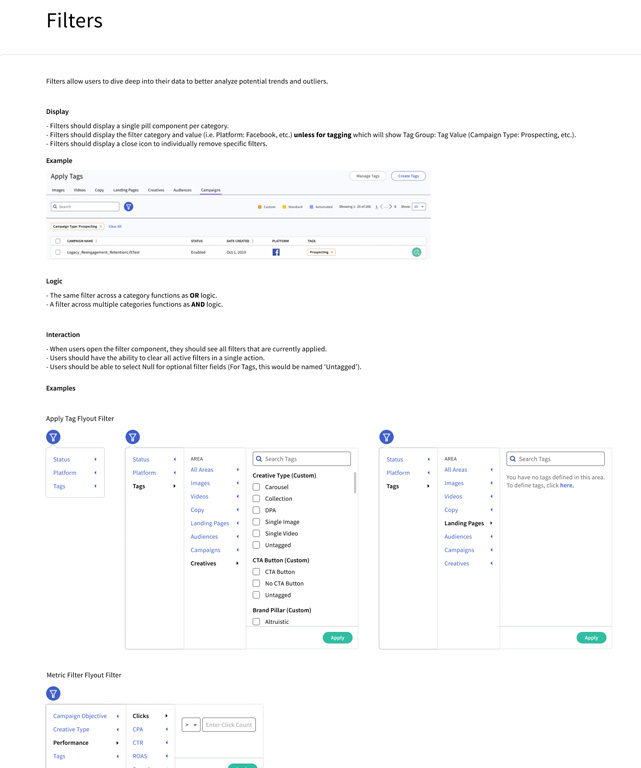
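The core metrics listed above can all be derived from a handful of raw counts per ad. The sketch below uses the common textbook definitions (CTR = clicks / impressions, Conversion Rate = conversions / clicks, CPC = spend / clicks, ROAS = revenue / spend); the `AdStats` schema and the sample numbers are hypothetical, and individual ad platforms may define conversion rate against a different denominator.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw counts for one ad over a reporting window (hypothetical schema)."""
    impressions: int
    clicks: int
    conversions: int
    spend: float    # total ad spend
    revenue: float  # revenue attributed to the ad


def safe_div(numerator: float, denominator: float) -> float:
    """Return 0.0 instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def key_metrics(s: AdStats) -> dict[str, float]:
    """Compute the core reporting metrics for a single ad."""
    return {
        "ctr": safe_div(s.clicks, s.impressions),               # click-through rate
        "conversion_rate": safe_div(s.conversions, s.clicks),   # conversions per click
        "cpc": safe_div(s.spend, s.clicks),                     # cost per click
        "roas": safe_div(s.revenue, s.spend),                   # return on ad spend
    }


ad = AdStats(impressions=10_000, clicks=250, conversions=10, spend=200.0, revenue=900.0)
print(key_metrics(ad))
```

For this sample ad the metrics come out to a 2.5% CTR, a 4% conversion rate, a $0.80 CPC, and a ROAS of 4.5. Returning 0.0 for an empty denominator is only a display convenience; a real reporting UI might show "n/a" instead so users don't mistake missing data for a zero cost.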

- Emphasized the importance of advanced search and filtering for finding and parsing large amounts of historical data.

- Explored ways to standardize reporting metrics and visualizations across different ad platforms to provide a cohesive view.

- Highlighted the importance of comparing individual ad performance against the fleet of other ads and campaigns.

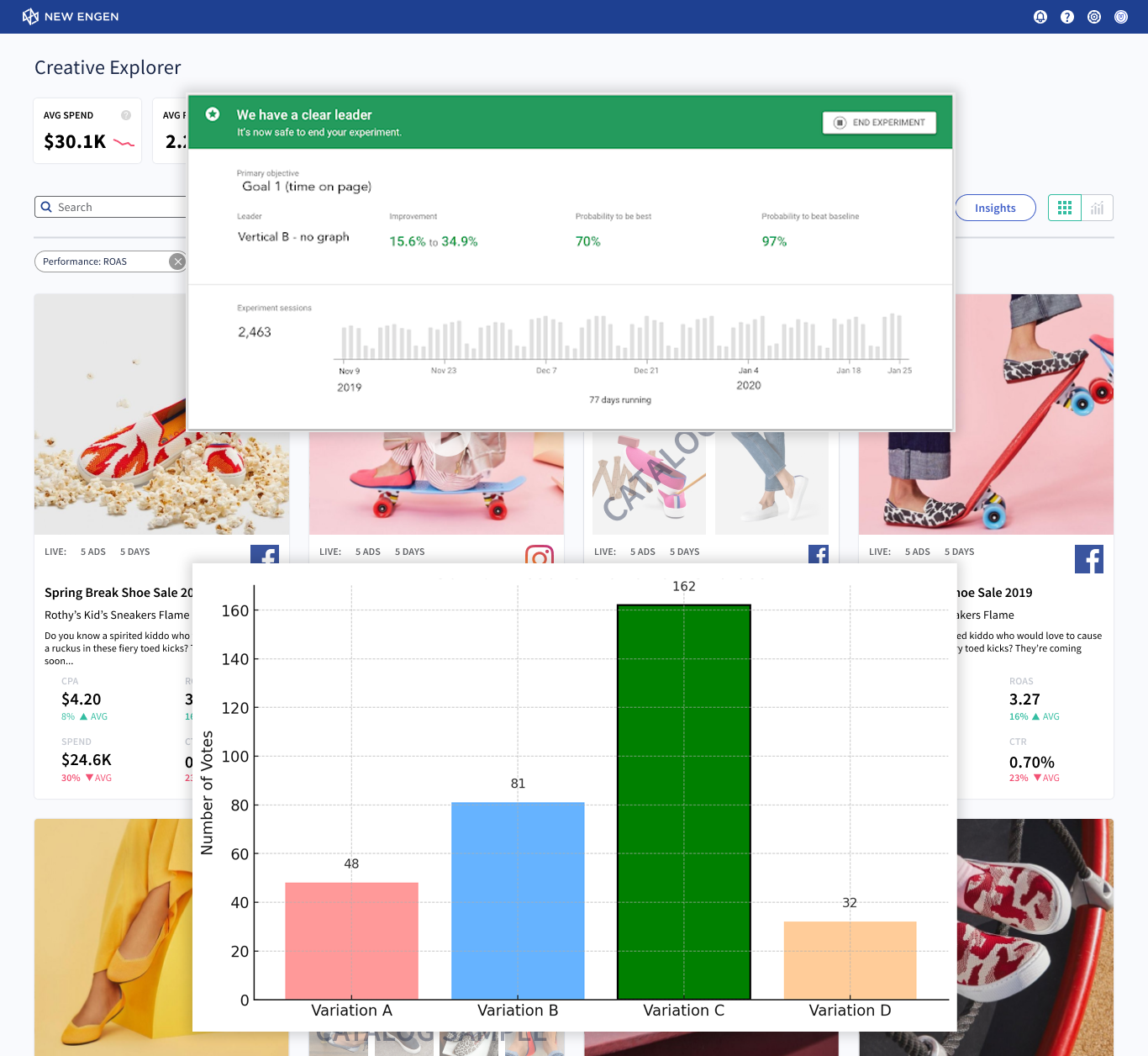
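Search, filtering, and fleet comparison come together in a query like Maryia's "beach" headline check. The sketch below filters ads by a headline keyword and reports how much higher their average CTR is than that of every other ad. The `Ad` record, `ctr_lift` function, and sample ads are hypothetical, and the case-insensitive substring match is an assumption (it would also match "beaches").

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Ad:
    """Hypothetical record for one ad in the reporting tool."""
    ad_id: str
    headline: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def ctr_lift(ads: list[Ad], keyword: str) -> float:
    """Percent by which the average CTR of ads whose headline contains
    `keyword` exceeds the average CTR of all other ads (the fleet)."""
    needle = keyword.lower()
    matching = [a.ctr for a in ads if needle in a.headline.lower()]
    others = [a.ctr for a in ads if needle not in a.headline.lower()]
    if not matching or not others:
        raise ValueError("need ads both with and without the keyword")
    baseline = mean(others)
    if baseline == 0:
        raise ValueError("baseline CTR is zero; lift is undefined")
    return (mean(matching) - baseline) / baseline * 100


ads = [
    Ad("a1", "Beach-ready frames for spring break", 1000, 30),
    Ad("a2", "Take your shades to the beach", 1000, 20),
    Ad("a3", "New spring sunglasses are here", 1000, 20),
    Ad("a4", "Find your perfect frames", 1000, 20),
]
print(f"CTR lift for 'beach' headlines: {ctr_lift(ads, 'beach'):.1f}%")
```

This averages per-ad CTRs, matching the "on average" framing in the scenario; pooling total clicks over total impressions for each group is a reasonable alternative that weights high-volume ads more heavily.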

- Emphasized the need for real-time data updates and the ability for users to customize and save views/reports.

- Discussed how the tool could provide actionable insights and recommendations based on data trends by integrating AI and machine learning suggestions such as:

  - adjusting ad spend based on different performance metrics

  - flagging content for targeted tweaks, such as overly generic copy, weak imagery, or an ineffective CTA

  - auto-tagging ads to improve performance

  - suggesting ad variants to test headlines, copy, imagery, and calls to action

  - recommending negative keywords to increase ad efficiency

  - reviewing ads pre-launch for campaign consistency and platform compliance

  - conducting a thorough responsive design test to ensure a seamless UX across all devices

  - alerting when ad spend is over or under the allocated budget or out of alignment with the desired ROI

  - ranking the best- to worst-performing campaigns and ads on each platform

  - adjusting bid modifiers to better target demographic segments, for example:

    - Women, Age 25-34: +30% bid modifier

    - Men, Age 18-24: -10% bid modifier

    - Household Income Top 10%: +20% bid modifier
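The bid modifier example can be made concrete by applying those percentages to a base bid. The sketch below stacks every matching modifier multiplicatively, as some ad platforms do for adjustments across different dimensions; that stacking rule, the segment keys, and the `adjusted_bid` function are assumptions for illustration, not a description of any specific platform's API.

```python
# Modifiers from the brainstorm example, as fractions of the base bid.
# Segment keys are hypothetical labels for illustration.
BID_MODIFIERS: dict[str, float] = {
    "women_25_34": 0.30,              # +30%
    "men_18_24": -0.10,               # -10%
    "household_income_top_10": 0.20,  # +20%
}


def adjusted_bid(base_bid: float, segments: list[str]) -> float:
    """Apply every matching modifier to the base bid, multiplying them
    together; segments without a modifier leave the bid unchanged."""
    multiplier = 1.0
    for segment in segments:
        multiplier *= 1.0 + BID_MODIFIERS.get(segment, 0.0)
    return round(base_bid * multiplier, 2)


print(adjusted_bid(2.00, ["women_25_34"]))                             # 2.6
print(adjusted_bid(2.00, ["women_25_34", "household_income_top_10"]))  # 3.12
print(adjusted_bid(2.00, ["men_18_24"]))                               # 1.8
```

Multiplying rather than adding means a user who falls into two boosted segments receives 1.3 × 1.2 = 1.56 times the base bid instead of 1.5 times, which is worth surfacing in the UI so that stacked adjustments don't surprise the marketer.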